MATLAB

Introduction

MATLAB is a powerful scripting language and computational environment. It is designed for numerical computing, visualization, high-level programming, and simulation. MATLAB also supports parallel processing.

MATLAB is installed on all HPC compute and login nodes, and on all Spear nodes.

Limited Licenses

We maintain a limited number of licenses for MATLAB use at the RCC. Sometimes, all available licenses are in use by other users and you must wait to check out a license. To test whether a license is currently available, run the following command from inside MATLAB; it returns 1 if a license could be checked out and 0 otherwise:

license('checkout','MATLAB')

Non-interactive jobs submitted using the scripts shown on this page will check for available MATLAB licenses before running. However, if you want to run a large number of MATLAB jobs simultaneously, use the MATLAB Compiler as described below to create executables from your code. A compiled executable runs without checking out a MATLAB license, so it avoids these license restrictions.

Versions

We have multiple versions of MATLAB available on our systems. The default is version 2018b; however, several other versions are available via environment modules. These can be loaded by using the following command:

module load matlab/VERSION

| Version | Load Command |
|---|---|
| 2020a | module load matlab/2020a |
| 2018b | module load matlab |
| 2017a | module load matlab/2017a |
| 2015b | module load matlab/2015b |
| 2014b | module load matlab/2014b |
| 2013b | module load matlab/2013b |

Interactively running MATLAB

You can work with MATLAB interactively on the Spear cluster, much as you would on your own workstation. This is useful for running short jobs or for testing and debugging production runs. To run MATLAB on Spear, connect to Spear, open a terminal, and start MATLAB:

[user@spear-login.rcc.fsu.edu] $ module load matlab

[user@spear-login.rcc.fsu.edu] $ matlab

You can use the MATLAB Parallel Computing Toolbox (PCT) to utilize more than one core. To use any of the parallel features of MATLAB (such as parfor), there are two modes:

- pmode is an interactive mode where you see individual workers (labs) in a GUI

- parpool is the mode where the labs run in the background.

The maximum number of workers that can be used in either mode is limited to 8.

Using pmode interactively

Below is an example of how to invoke interactive pmode with four workers:

pmode start local 4

This will open a Parallel Command Window (PCW). The workers then receive commands entered in PCW (at the P>> prompt), process them, and send the command output back to the PCW. You can transfer variables between the MATLAB client and the workers. For example, to copy the variable x in Lab 2 to xc on the client, use:

pmode lab2client x 2 xc

Similarly, to copy the variable xc on the client to the variable x on Lab 2, use:

pmode client2lab xc 2 x

You can perform plotting and all other operations from inside PCW.

You can distribute values among workers as well. For example, to distribute the array x among workers, use:

codistributed(x,'convert')

The functions numlabs, labindex, labSend, labReceive, labProbe, labBroadcast, and labBarrier play roles similar to MPI calls when parallelizing code. Please refer to the MATLAB documentation for a full discussion of these commands. Entering the following command in the PCW ends the session and releases the licenses for other users:

pmode quit

Interactive parallel computing using parpool

The matlabpool utility has been replaced by the parpool utility in current versions of MATLAB. The syntax for parpool is

parpool

parpool(poolsize)

parpool('profile',poolsize)

parpool('cluster',poolsize)

ph = parpool(...)

...where poolsize, profile, and cluster are respectively the size of the MATLAB pool of workers, the profile you created, and the cluster you created. The last line creates a handle ph for the pool.

parpool enables the full functionality of the parallel language features (parfor and spmd) in MATLAB by creating a special job on a pool of workers, and connecting the MATLAB client to the parallel pool.

The following example creates a pool of 4 workers, and runs a parfor-loop using this pool:

>>parpool(4)

>> parfor i = 1:10

feature getpid;

disp(ans)

end

1172

1172

1171

1171

1169

1169

1170

1172

1171

1169

>> delete(gcp)

The following example creates a pool of 4 workers, and runs a simple spmd code block using this pool:

>> ph = parpool('local',4) % ph is the handle of the pool

>> spmd

>> a = labindex

>> b = a.^2

>> end

Lab 1:

a = 1

b = 1

Lab 2:

a = 2

b = 4

Lab 3:

a = 3

b = 9

Lab 4:

a = 4

b = 16

>> delete(ph)

Note. You cannot simultaneously run more than one interactive parpool session. You must delete your current parpool session before starting a new one. To delete the current session, use either of the following:

delete(gcp)

delete(ph)

...where the gcp function returns the current pool, and ph is the handle of the pool.

Non-interactive job submission

The following examples demonstrate how to submit MATLAB jobs to the HPC. You should already be familiar with how to connect and submit jobs in order to submit MATLAB jobs.

A Note about MATLAB 2017a

You may notice several warning messages related to Java in the output files or on the terminal when running non-interactive jobs using MATLAB 2017a. While the cause of these warnings is not clear, they do not appear to cause any errors in the job runs themselves. For that reason, these warnings may be ignored.

Single core jobs

The following example is a sample submit script (test1.sh) to run the MATLAB program test1.m that uses a single core.

Note: test1.m should be a function, not a script. To convert a script, enclose its contents in a dummy function with the same name as the file.

#!/bin/bash

#SBATCH -N 1

#SBATCH --ntasks-per-node=1

#SBATCH -p genacc_q

#SBATCH -t 01:00:00 #Change the walltime as necessary

module load matlab

matlab -nosplash -nojvm -nodesktop -r "test1; exit"

Then, you can submit your job to Slurm normally:

sbatch test1.sh

Note: The Parallel Computing Toolbox (PCT) cannot be used within your function in a single-core job; you cannot use parfor or any other command that utilizes more than one core.
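As an illustration of the note above about enclosing a script in a function, the following shell sketch builds a function file from an existing script. The helper name wrap_as_function and the input file name test1_script.m are hypothetical, and the sketch assumes the script does not define local functions of its own and that the input and output files have different names.

```bash
# Hypothetical helper: wrap the body of a MATLAB script in a function file.
# Usage: wrap_as_function SCRIPT_FILE FUNCTION_NAME
# Writes FUNCTION_NAME.m in the current directory.
wrap_as_function() {
    local script="$1" func="$2"
    {
        printf 'function %s()\n' "$func"   # function name must match the file name
        cat "$script"                      # original script body, unchanged
        printf '\nend\n'                   # close the function
    } > "${func}.m"
}
```

For example, wrap_as_function test1_script.m test1 would write test1.m, which the submit script above then calls as test1.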

Multiple Core Jobs

You can run MATLAB jobs on multiple cores by requesting more tasks with the -n parameter in the Slurm submit script and creating a parallel pool of matching size with parpool in your MATLAB code. The maximum number of cores available for any single job is currently eight. The earlier matlabpool utility has been deprecated and removed in recent versions. For current versions of MATLAB, you will need to modify your MATLAB code to include the following lines:

n_cores = str2double(getenv('SLURM_NTASKS')); % getenv returns text; parpool needs a number

pool = parpool('local', n_cores);

% ... your MATLAB code goes here ...

delete(pool)

With these modifications, your code will now get the number of cores you requested in your Slurm submit script (from the -n parameter) and will create a parallel pool which has as many workers as the number of processes you have requested. Then, your code can take advantage of typical parallel computing constructs like parfor and others. When your job finishes, the last line will delete the parallel pool of workers before exiting.

Once your code has been modified, your submit script should be similar to the serial non-interactive job described above but with more tasks requested.

#!/bin/bash

#SBATCH -N 1

#SBATCH -n 4

#SBATCH -p genacc_q

#SBATCH -t 01:00:00 #Change the walltime as necessary

module load matlab

matlab -nosplash -nodesktop -r "test1; exit"

Then, you can submit your job to Slurm normally:

sbatch test1.sh
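If you change the core count often, the submit script above can be generated rather than edited by hand. The following is a minimal sketch, not a supported tool: the helper name make_matlab_submit is hypothetical, the default values are copied from the examples on this page, and the limit of eight tasks reflects the per-job maximum stated above.

```bash
# Hypothetical helper: print a Slurm submit script for a multi-core MATLAB job.
# Usage: make_matlab_submit NTASKS [FUNCTION] [PARTITION] [WALLTIME]
make_matlab_submit() {
    local ntasks="$1"
    local func="${2:-test1}"
    local partition="${3:-genacc_q}"
    local walltime="${4:-01:00:00}"
    local max_cores=8   # single-job core limit stated on this page

    # Reject anything that is not a non-negative integer.
    case "$ntasks" in
        ''|*[!0-9]*) echo "NTASKS must be a positive integer" >&2; return 1 ;;
    esac
    if [ "$ntasks" -lt 1 ] || [ "$ntasks" -gt "$max_cores" ]; then
        echo "NTASKS must be between 1 and $max_cores" >&2
        return 1
    fi

    # Emit the same script layout as the example above, with -n filled in.
    cat <<EOF
#!/bin/bash
#SBATCH -N 1
#SBATCH -n $ntasks
#SBATCH -p $partition
#SBATCH -t $walltime

module load matlab
matlab -nosplash -nodesktop -r "$func; exit"
EOF
}
```

For example, make_matlab_submit 4 > test1.sh followed by sbatch test1.sh would request four tasks, and the MATLAB code then reads that value from SLURM_NTASKS.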

Multiple node jobs

Multiple node jobs require the MATLAB_DCS package. This is currently not available on the HPC. If your job needs access to MATLAB_DCS, please submit a ticket or consider rewriting your code to take advantage of MPI capabilities (C, C++, Fortran or Python).

Using the MATLAB Compiler

If you need to use a large number of simultaneous MATLAB workers, we advise that you compile your code into an executable. This allows you to write and test your code in MATLAB, and compile it into a standalone executable when you are ready to run a production job. One major advantage of compiling your MATLAB code is that your job will not be restricted by license limitations.

You can use the MATLAB compiler, mcc, on Spear or any of the HPC login nodes in order to create a binary executable of the code. Be sure to compile in whichever environment (HPC or Spear) you intend to run the code.

To compile the non-parallel code test1.m, use the MATLAB command:

mcc -R -nodisplay -R -nojvm -R -nosplash -R -singleCompThread -m test1.m

Note: This works only with the matlab module, not the matlab_dcs module.

The above command will create the script run_test1.sh and the executable test1. A brief description of these compiler flags follows.

-R: Specifies runtime options, and must be used with the other runtime flags (nodisplay, nosplash, etc)

-R -nodisplay: Any functions that provide a display will be disabled

-R -nojvm: Disable the Java Virtual Machine

-R -nosplash: Starts MATLAB, but does not display the splash screen

-R -singleCompThread: Runs only a single thread in the runtime environment

-m: Generates a standalone executable

After successful completion, mcc creates the following files:

- a binary file,

- a script to load necessary environment variables and run the binary,

- a readme.txt, and,

- a log file.

The binary file can be run via the generated script with the following command:

<full-path-to-script>run_test1.sh $MCCROOT <input-arguments>

You can place this command in your Slurm submit script in order to run it as part of an HPC job.

Note that input arguments will be interpreted as string values, so any code that utilizes these arguments must convert these strings to the correct data type.
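One way to run many copies of a compiled program is a Slurm job array, in which each array task passes its own index to the program as an input argument. The sketch below prints such a submit script. The --array option and the SLURM_ARRAY_TASK_ID variable are standard Slurm features, but the helper name make_array_submit, the partition, and the walltime are placeholders, and it is an assumption that loading the matlab module defines MCCROOT on your system.

```bash
# Hypothetical helper: print a Slurm job-array submit script for a compiled
# MATLAB program. Usage: make_array_submit SCRIPT_DIR FIRST LAST
make_array_submit() {
    local script_dir="$1" first="$2" last="$3"
    cat <<EOF
#!/bin/bash
#SBATCH -N 1
#SBATCH -n 1
#SBATCH -p genacc_q
#SBATCH -t 01:00:00
#SBATCH --array=${first}-${last}

# Assumption: loading the module defines MCCROOT on this system.
module load matlab

# Each array task passes its own index to the program as a string argument.
${script_dir}/run_test1.sh \$MCCROOT \$SLURM_ARRAY_TASK_ID
EOF
}
```

Because the index arrives as a string, the MATLAB function must convert it to a number before using it, for example with str2double.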

One concern with this method of compiling and running a MATLAB program is that the binaries generated will contain all of the toolboxes available in the user's MATLAB environment, resulting in large binary files. To avoid this, use the -N compiler flag. This will remove all but essential toolboxes, and other tools or .m files required can be attached with the -a option. The recommended syntax for generating serial binaries is:

mcc -N -v -R -nodisplay -R -nojvm -R -nosplash -R -singleCompThread -m test1.m

In this example, the -v option enables verbose mode. To run a MATLAB program in parallel using the Parallel Computing Toolbox, add the -p distcomp option to the command-line arguments:

mcc -N -v -p distcomp -m test1.m

To run this binary, you must provide a parallel profile (see Creating a Profile above):

# the following assumes you are using MATLAB R2018b;

# revise the path to reflect the version you are using

./run_test1.sh $MCCROOT -mcruserdata ParallelProfile:/gpfs/research/software/matlab/r2018b/toolbox/distcomp/parallel.settings

In this example, the default local profile is used. This can be used in any SLURM submit script without any modification, and no MATLAB licenses will be used when the job runs.

The generation of binaries is the same for MATLAB Distributed Computing Engine jobs, but a different Slurm-aware profile needs to be provided. You should always use the supplied HPC profile for these types of jobs. See the section above on submitting parallel MATLAB jobs to see what lines should be added to a MATLAB script when using the Distributed Computing Engine. The submit command for a distributed job is:

./run_test1.sh $MCCROOT -mcruserdata ParallelProfile:/gpfs/research/software/userfiles/hpc.settings

Using MATLAB with GPU Processors

MATLAB is capable of using GPUs to accelerate calculations. Most built-in functions have alternative GPU versions. In order to take advantage of GPUs, you will need to submit your jobs to the HPC backfill2, backfill or genacc_q partitions which currently have GPUs available. This will schedule your jobs to run on our GPU-enabled compute nodes.

You can try the following example, a Mandelbrot set program named gpu_mandelbrot:

function [mbset, t] = gpu_mandelbrot(niter, steps, xmin, xmax, ymin, ymax)

t0 = tic();

x = gpuArray.linspace(xmin, xmax, steps);

y = gpuArray.linspace(ymin, ymax, steps);

[xGrid,yGrid] = meshgrid(x, y);

c = xGrid + 1i * yGrid;

z = zeros(size(c));

mbset = zeros(size(c));

for ii = 1:niter

z = z.*z + c;

mbset(abs(z) > 2 & mbset == 0) = niter - ii;

end

t = toc(t0);

Run the program and display the results with the following commands:

[mandelSet, time] = gpu_mandelbrot(3600,100,-2,1,-1.5,1.5)

surface(mandelSet)

The arrays x and y are generated on the GPU, utilizing its massively parallel architecture, which also handles the remainder of the computations that involve these arrays.

Submitting HPC jobs from your own copy of MATLAB

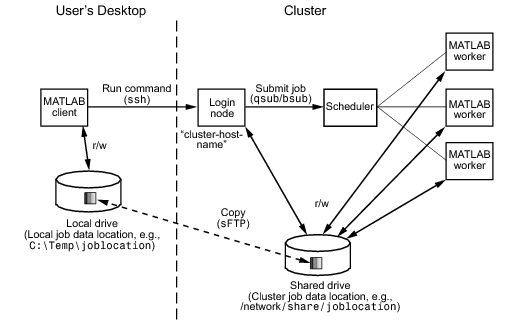

This section describes how to submit MATLAB jobs from your own workstation (desktop/laptop) to the HPC cluster. Use VPN if you are off campus.

The following figure illustrates the scheme for remote job submissions:

To submit a MATLAB job to the HPC cluster from your computer, a generic scheduler interface must be used. You must create a generic cluster object in MATLAB before you can submit jobs. The generic cluster object relies on the following functions:

| Function | Description |
|---|---|
| CancelJobFcn | Function to run when cancelling job |
| CancelTaskFcn | Function to run when cancelling task |
| CommunicatingSubmitFcn | Function to run when submitting communicating job |
| DeleteJobFcn | Function to run when deleting job |
| DeleteTaskFcn | Function to run when deleting task |
| GetJobStateFcn | Function to run when querying job state |
| IndependentSubmitFcn | Function to run when submitting independent job |

We have provided these functions in the tarball matlab.tar located at:

/gpfs/research/software/userfiles/matlab.tar

These functions should be copied to:

[LOCAL_MATLAB_ROOT_DIRECTORY]/toolbox/local/

Substitute the path to MATLAB on your workstation for [LOCAL_MATLAB_ROOT_DIRECTORY]. You can use the following commands to download and extract the files (assuming you are in your MATLAB root directory) on your workstation:

$ scp you@hpc-login.rcc.fsu.edu:/gpfs/research/software/userfiles/matlab.tar .

$ tar -xvf matlab.tar

matlab/

matlab/communicatingSubmitFcn.m

matlab/independentSubmitFcn.m

matlab/getSubmitString.m

matlab/communicatingJobWrapper.sh

matlab/createSubmitScript.m

matlab/extractJobId.m

matlab/deleteJobFcn.m

matlab/getRemoteConnection.m

matlab/getJobStateFcn.m

matlab/independentJobWrapper.sh

matlab/getCluster.m

Next, you must set up PKI authentication (SSH keys instead of a password) to the HPC. Refer to our "Using SSH Keys" documentation for how to do this. Make sure that you do NOT use a passphrase when setting up your keypair.

Now, move all the files except getCluster.m to the toolbox/local directory on your machine. For example, if MATLAB is installed under /usr/local, this can be done with:

mv matlab/!(getCluster.m) /usr/local/MATLAB/R2013b/toolbox/local/

Make sure the path is correct before you copy and that you have administrative privileges on your computer.
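The !(...) pattern in the mv command above is a bash extended glob, which only works when the extglob shell option is enabled (shopt -s extglob). If you would rather not depend on that option, a plain loop does the same job. This is a minimal sketch; the helper name move_support_files is hypothetical.

```bash
# Hypothetical helper: move every file in SRC to DEST except getCluster.m.
# Usage: move_support_files SRC DEST
move_support_files() {
    local src="$1" dest="$2"
    local f
    for f in "$src"/*; do
        [ -e "$f" ] || continue                              # glob matched nothing
        [ "$(basename "$f")" = "getCluster.m" ] && continue  # keep this file in SRC
        mv -- "$f" "$dest"/
    done
}
```

For the example path above, this would be called as move_support_files matlab /usr/local/MATLAB/R2013b/toolbox/local, with the destination adjusted to your own installation.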

Next, update the `getCluster.m` file. matlab.tar provides several functions you need to create a generic cluster object. The function getCluster() uses these functions to configure a cluster object for you.

Here is the content of the getCluster.m:

function [ cluster ] = getCluster(ppn, queue, rtime, LocalDataLocation, RemoteDataLocation)

%Find the path to id_rsa key file in YOUR system and update the following line

username = 'YOUR HPC USER NAME';

keyfile = '/home/USER/.ssh/id_rsa'; %Your actual path may be DIFFERENT!

%Do not change anything below this line

if (strcmp(username, 'YOUR HPC USER NAME') == 1)

disp('You need to put your RCC user name in line 4!')

return

end

if (exist(keyfile, 'file') == 0)

disp('Key file path does not exist. Did you configure password-less login to HPC?');

return

end

ClusterMatlabRoot = '/opt/matlab/current';

clusterHost='submit.hpc.fsu.edu';

cluster = parcluster('hpc');

set(cluster,'HasSharedFilesystem',false);

set(cluster,'JobStorageLocation',LocalDataLocation);

set(cluster,'OperatingSystem','unix');

set(cluster,'ClusterMatlabRoot',ClusterMatlabRoot);

set(cluster,'IndependentSubmitFcn',{@independentSubmitFcn,clusterHost, ...

RemoteDataLocation,username,keyfile,rtime,queue});

set(cluster,'CommunicatingSubmitFcn',{@communicatingSubmitFcn,clusterHost, ...

RemoteDataLocation,username,keyfile,rtime,queue,ppn});

set(cluster,'GetJobStateFcn',{@getJobStateFcn,username,keyfile});

set(cluster,'DeleteJobFcn',{@deleteJobFcn,username,keyfile});

The five input arguments of the function getCluster are

| Argument | Description |
|---|---|
| ppn | number of processors (cores) per node |
| queue | queue (partition) you want to submit the job to (e.g., backfill, genacc_q) |
| rtime | wall time |
| LocalDataLocation | directory to store job data on your workstation |
| RemoteDataLocation | directory to store job data on your HPC disk space |

The first time you download, edit the getCluster.m file to include the following:

- your RCC user name in line 4, and

- the correct path to your id_rsa key file in line 5 (usually ~/.ssh/id_rsa)

Before you call this function, create a separate folder (to be used as "RemoteDataLocation" in the getCluster.m script) in your HPC disk space to store runtime MATLAB files, for example,

[user@hpc-login.rcc.fsu.edu] $ mkdir -p $HOME/matlab/work

Also create a folder on your workstation to be used as the LocalDataLocation. Clean these folders regularly after finishing jobs; they tend to fill up.
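To see what could be cleaned up, you can list the entries in a job data folder that have not been modified for some time. The sketch below only prints candidates and deletes nothing. The helper name list_old_job_files and the 30-day default are arbitrary choices for illustration, not an RCC policy.

```bash
# Hypothetical helper: list entries under DIR last modified more than DAYS days ago.
# Usage: list_old_job_files DIR [DAYS]
list_old_job_files() {
    local dir="$1" days="${2:-30}"
    [ -d "$dir" ] || { echo "No such directory: $dir" >&2; return 1; }
    # -mindepth 1 leaves the folder itself out of the listing.
    find "$dir" -mindepth 1 -mtime +"$days" -print
}
```

For example, list_old_job_files "$HOME/matlab/work" lists old entries in the remote folder created above. Review the list and confirm that no running job still needs those files before removing anything.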

Submitting Jobs

The following lines can be used as a template to create a generic cluster object which you will use to submit jobs to HPC from your local copy of MATLAB:

processors = 4; % Total number of processors (workers) used. MUST BE LESS THAN OR EQUAL TO 32

ppn = 4; % Number of processors (cores) used per node

queue = 'genacc_q'; % Replace this with your choice of partition

time = '01:00:00'; % Run time

LocalDataLocation = ''; % Full path to the MATLAB job folder on your workstation (not the HPC)

% Full path to a MATLAB scratch folder on HPC (replace USER with your RCC username)

RemoteDataLocation = '/gpfs/home/USER/matlab/work';

cluster = getCluster(ppn, queue, time, LocalDataLocation, RemoteDataLocation);

The following is an example to create a communicating job for the cluster:

j1 = createCommunicatingJob(cluster); % This example creates a communicating job (e.g., parfor)

j1.AttachedFiles = {'testparfor2.m'}; % Send all scripts and data files needed for the job

set(j1, 'NumWorkersRange', [1 processors]); % Number of processors

set(j1, 'Name', 'Test'); % Give a name for your job

t1 = createTask(j1, @testparfor2, 1, {processors-1});

submit(j1);

%wait(j1); % MATLAB will wait for the completion of the job

%o=j1.fetchOutputs; % Collect outputs after job is done

Note. Only use the last two lines in this example for testing small jobs. Production jobs may wait in the HPC queue for a long time and will cause your copy of MATLAB to wait until the job has completed before being usable again. For production jobs, you should write data directly to the filesystem from your MATLAB script instead of collecting it when the script is complete.

The following is an example to create an independent job for the cluster:

j2 = createJob(cluster); % create an independent job

t2 = createTask(j2, @rand, 1, {{10,10},{10,10},{10,10},{10,10}}); % create an array of 4 tasks

submit(j2)

wait(j2)

o2 = fetchOutputs(j2) % fetch the results

o2{1:4} % display the results

Note. A communicating job contains only one task. However, this task can run on multiple workers. Also, the task can contain parfor-loop or spmd code block to improve the performance. Conversely, an independent job can contain multiple tasks. These tasks do not communicate with each other and each task runs on a single worker.

MATLAB Workshops

We regularly offer MATLAB workshops. Keep an eye on our event schedule or send us a message to find out when the next one will be. You can also check out our slides and materials from past workshops.